This post tries running Microsoft's small reasoning models "Phi-4-reasoning", "Phi-4-reasoning-plus", and "Phi-4-mini-reasoning" with llama.cpp, software designed to run LLMs efficiently on CPUs.

llama.cpp

git clone

% git clone https://github.com/ggml-org/llama.cpp.git

Cloning into 'llama.cpp'...

remote: Enumerating objects: 49829, done.

remote: Counting objects: 100% (104/104), done.

remote: Compressing objects: 100% (74/74), done.

remote: Total 49829 (delta 55), reused 32 (delta 30), pack-reused 49725 (from 3)

Receiving objects: 100% (49829/49829), 103.73 MiB | 6.41 MiB/s, done.

Resolving deltas: 100% (35910/35910), done.

cmake

% cd llama.cpp

% cmake -B build

-- The C compiler identification is AppleClang 14.0.3.14030022

-- The CXX compiler identification is AppleClang 14.0.3.14030022

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Check for working C compiler: /Library/Developer/CommandLineTools/usr/bin/cc - skipped

-- Detecting C compile features

-- Detecting C compile features - done

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /Library/Developer/CommandLineTools/usr/bin/c++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Found Git: /opt/homebrew/bin/git (found version "2.47.1")

-- Performing Test CMAKE_HAVE_LIBC_PTHREAD

-- Performing Test CMAKE_HAVE_LIBC_PTHREAD - Success

-- Found Threads: TRUE

-- Warning: ccache not found - consider installing it for faster compilation or disable this warning with GGML_CCACHE=OFF

-- CMAKE_SYSTEM_PROCESSOR: arm64

-- Including CPU backend

-- Accelerate framework found

-- Found OpenMP_C: -Xclang -fopenmp (found version "5.0")

-- Found OpenMP_CXX: -Xclang -fopenmp (found version "5.0")

-- Found OpenMP: TRUE (found version "5.0")

-- ARM detected

-- Performing Test GGML_COMPILER_SUPPORTS_FP16_FORMAT_I3E

-- Performing Test GGML_COMPILER_SUPPORTS_FP16_FORMAT_I3E - Failed

-- ARM -mcpu not found, -mcpu=native will be used

-- Performing Test GGML_MACHINE_SUPPORTS_dotprod

-- Performing Test GGML_MACHINE_SUPPORTS_dotprod - Success

-- Performing Test GGML_MACHINE_SUPPORTS_i8mm

-- Performing Test GGML_MACHINE_SUPPORTS_i8mm - Failed

-- Performing Test GGML_MACHINE_SUPPORTS_noi8mm

-- Performing Test GGML_MACHINE_SUPPORTS_noi8mm - Success

-- Performing Test GGML_MACHINE_SUPPORTS_sve

-- Performing Test GGML_MACHINE_SUPPORTS_sve - Failed

-- Performing Test GGML_MACHINE_SUPPORTS_nosve

-- Performing Test GGML_MACHINE_SUPPORTS_nosve - Success

-- Performing Test GGML_MACHINE_SUPPORTS_sme

-- Performing Test GGML_MACHINE_SUPPORTS_sme - Failed

-- Performing Test GGML_MACHINE_SUPPORTS_nosme

-- Performing Test GGML_MACHINE_SUPPORTS_nosme - Success

-- ARM feature DOTPROD enabled

-- ARM feature FMA enabled

-- ARM feature FP16_VECTOR_ARITHMETIC enabled

-- Adding CPU backend variant ggml-cpu: -mcpu=native+dotprod+noi8mm+nosve+nosme

-- Looking for dgemm_

-- Looking for dgemm_ - found

-- Found BLAS: /Library/Developer/CommandLineTools/SDKs/MacOSX13.3.sdk/System/Library/Frameworks/Accelerate.framework

-- BLAS found, Libraries: /Library/Developer/CommandLineTools/SDKs/MacOSX13.3.sdk/System/Library/Frameworks/Accelerate.framework

-- BLAS found, Includes:

-- Including BLAS backend

-- Metal framework found

-- The ASM compiler identification is AppleClang

-- Found assembler: /Library/Developer/CommandLineTools/usr/bin/cc

-- Including METAL backend

-- Found CURL: /Library/Developer/CommandLineTools/SDKs/MacOSX13.3.sdk/usr/lib/libcurl.tbd (found version "7.87.0")

-- Configuring done (7.1s)

-- Generating done (0.6s)

-- Build files have been written to: /Users/admin/llama.cpp/build

cmake build

% cmake --build build --config Release

[ 1%] Building C object ggml/src/CMakeFiles/ggml-base.dir/ggml.c.o

[ 1%] Building C object ggml/src/CMakeFiles/ggml-base.dir/ggml-alloc.c.o

[ 1%] Building CXX object ggml/src/CMakeFiles/ggml-base.dir/ggml-backend.cpp.o

[100%] Building CXX object pocs/vdot/CMakeFiles/llama-q8dot.dir/q8dot.cpp.o

[100%] Linking CXX executable ../../bin/llama-q8dot

[100%] Built target llama-q8dot

Phi-4-reasoning

Download the GGUF version of the Phi-4 model from the following page:

unsloth/Phi-4-reasoning-GGUF · Hugging Face

For this test, I downloaded "phi-4-reasoning-Q4_1.gguf".
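The same file can also be fetched from the terminal instead of the browser. The following is a minimal sketch, not the method used in this post: the helper names `hf_file_url` and `download_model` are hypothetical, the `main` branch is an assumption, and the file name is copied as written above, so check its exact spelling on the repository's file list before running it.

```shell
# Build the direct-download URL for a file in a Hugging Face model repository.
# Assumption: the file lives on the "main" branch of the repository.
hf_file_url() {
  printf 'https://huggingface.co/%s/resolve/main/%s' "$1" "$2"
}

# Download the quantized model into the current directory.
# The repository and file name are the ones mentioned in this post.
download_model() {
  file="phi-4-reasoning-Q4_1.gguf"
  # -L follows the redirect that Hugging Face issues for large files.
  curl -L -o "$file" "$(hf_file_url unsloth/Phi-4-reasoning-GGUF "$file")"
}
```

After defining the functions, calling `download_model` saves the file in the current directory, and that path can then be passed to the `-m` option.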

Run

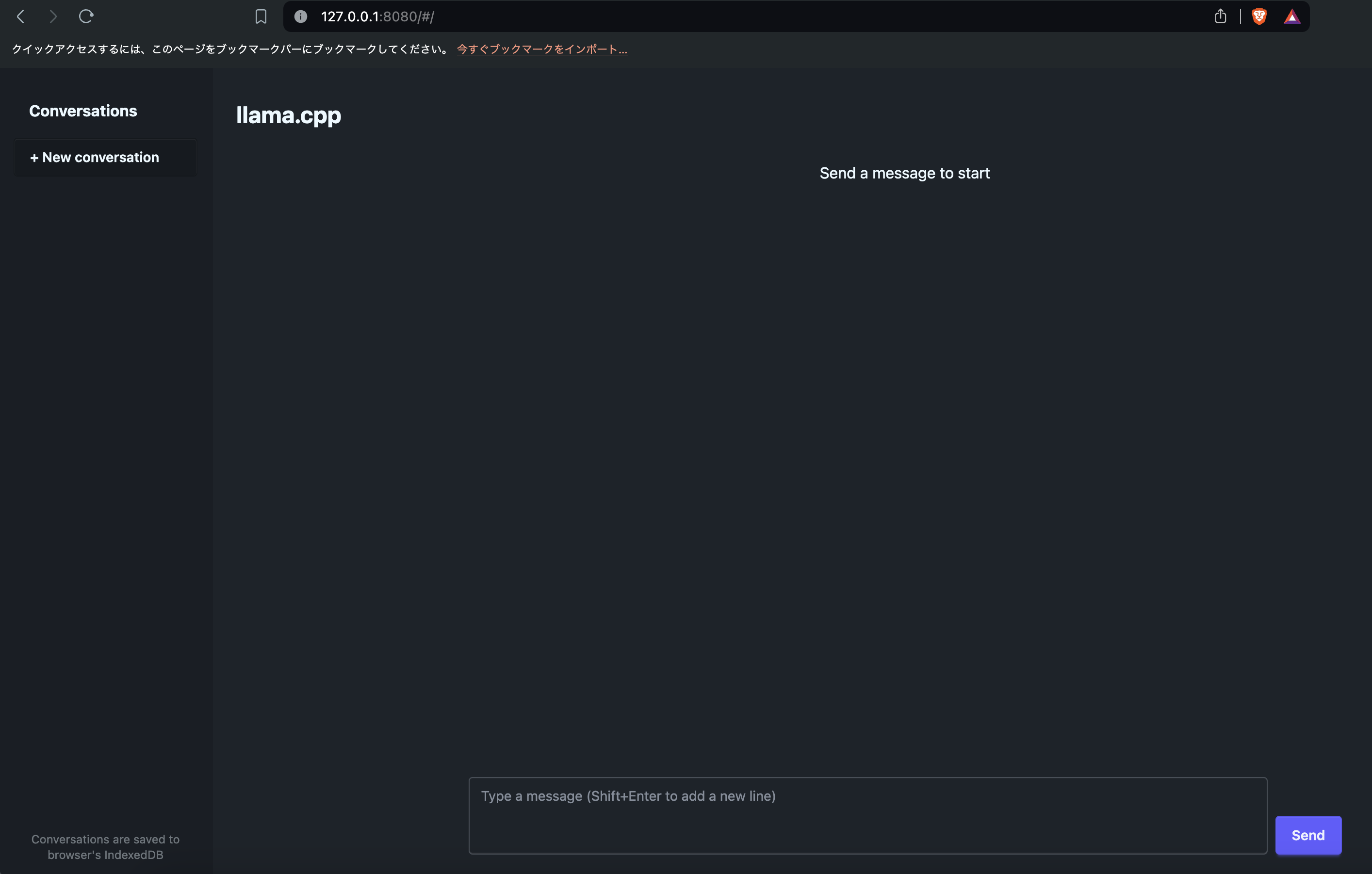

"./llama-server" starts the server together with its web GUI. After the "-m" option, give the path to the model file you downloaded.

% cd build/bin

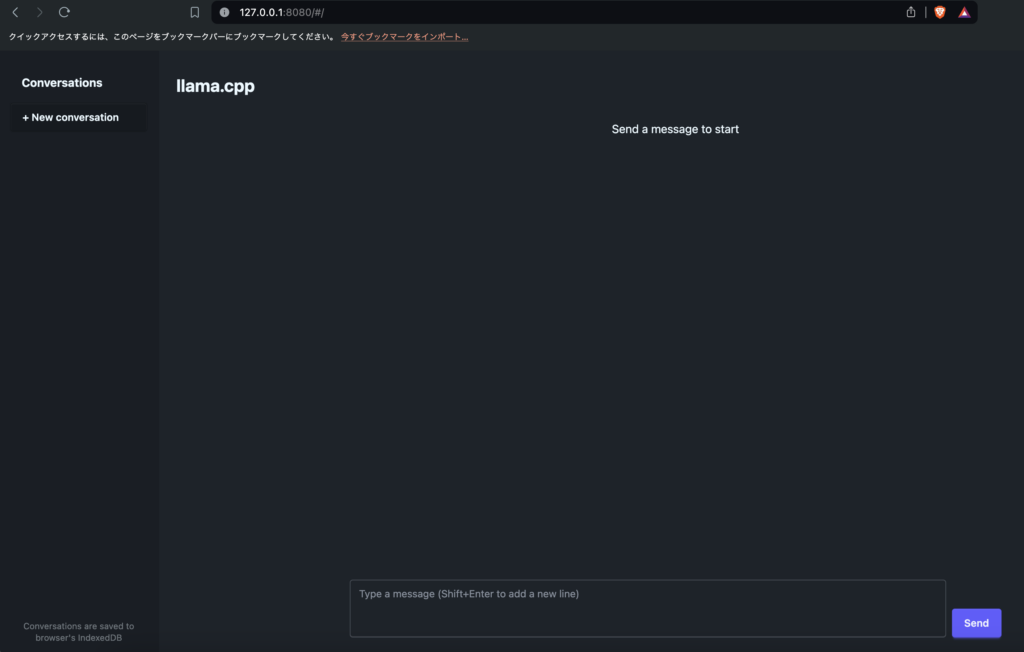

% ./llama-server -m /Users/<username>/Downloads/phi-4-reasoning-Q4_1.gguf

Once the program is running, enter "http://127.0.0.1:8080" in your browser to access it.

The screen below appears. Type a question into "Type a message" and Phi-4 will answer.
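Besides the web GUI, llama-server also answers HTTP requests, so the same question can be sent from a second terminal. The sketch below assumes the server is listening on the default address shown above and uses its `/health` and OpenAI-compatible `/v1/chat/completions` endpoints. The function names `wait_for_server`, `chat_payload`, and `ask_phi` are hypothetical, and the payload builder does no JSON escaping, so it only suits prompts without double quotes or backslashes.

```shell
# Poll the /health endpoint until the server answers successfully.
# Arguments: base URL (default assumed below) and maximum number of attempts.
wait_for_server() {
  base_url="${1:-http://127.0.0.1:8080}"
  max_tries="${2:-60}"
  i=0
  while [ "$i" -lt "$max_tries" ]; do
    # -f makes curl fail on HTTP errors, e.g. while the model is still loading.
    if curl -sf "${base_url}/health" >/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Build the JSON body for a single-turn chat request.
# Assumption: the prompt contains no double quotes or backslashes.
chat_payload() {
  printf '{"messages":[{"role":"user","content":"%s"}]}' "$1"
}

# Send one prompt to the running server and print the raw JSON response.
ask_phi() {
  curl -s http://127.0.0.1:8080/v1/chat/completions \
    -H "Content-Type: application/json" \
    -d "$(chat_payload "$1")"
}
```

For example, `wait_for_server && ask_phi "What is 17 * 23?"` waits until the model has loaded and then prints a JSON response; the reply text is expected under `choices[0].message.content`.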
